Upgrading Kubernetes on EKS with Terraform


New Kubernetes versions are released multiple times per year, and you must upgrade your EKS cluster periodically to stay up to date. In this blog post we will go over the steps required to safely upgrade your production EKS cluster managed by Terraform.

At Blue Matador, we use Terraform to manage most of our AWS infrastructure, and our EKS cluster is no exception. We use the eks module, which provides a lot of functionality for managing your EKS cluster and worker nodes. The rest of this post will refer to this specific Terraform module for an EKS cluster upgrade, but many of the same steps will apply to clusters managed using CloudFormation, eksctl, or even the AWS console.

The upgrade process we will use results in very little disruption for your cluster, and is designed to be used on a production Kubernetes cluster. We will perform the following steps:

- Upgrade EKS cluster version

- Update system component versions

- Select a new AMI for worker nodes

- Create a new worker group for worker nodes

- Drain pods from the old worker nodes

- Clean up extra resources


Step 1: Upgrade EKS cluster version

First, make sure you are using a version of kubectl that is at least as new as the Kubernetes version you are upgrading to. Installation instructions are available in the Kubernetes documentation.
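If you want to script this check, here is a minimal sketch. It assumes your kubectl supports `kubectl version --client -o json`, whose output includes a `gitVersion` field such as `v1.13.7`. It also assumes GNU `sort -V` is available for version ordering. The function names are hypothetical.

```shell
# Hypothetical helper: succeeds if version $1 >= version $2 (e.g. 1.14 >= 1.13).
# Uses GNU sort -V so that 1.10 sorts after 1.9.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: prints the kubectl client's major.minor version, taken from
# the "gitVersion" field (e.g. "v1.13.7") of `kubectl version --client -o json`.
kubectl_client_minor() {
  kubectl version --client -o json \
    | grep -o '"gitVersion": *"v[0-9]*\.[0-9]*' \
    | head -n 1 \
    | sed 's/.*"v//'
}

# Succeeds if the installed kubectl is at least the target Kubernetes version ($1).
kubectl_client_at_least() {
  local current
  current="$(kubectl_client_minor)"
  if [ -z "$current" ]; then
    echo "could not determine the kubectl client version" >&2
    return 2
  fi
  version_ge "$current" "$1"
}
```

For example, `kubectl_client_at_least 1.13 || echo "upgrade kubectl first"` prints a reminder when the installed client is older than 1.13.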

Next, update the cluster_version in your eks_cluster module to the next version of EKS. EKS can only upgrade a cluster one minor version at a time. If you are currently on 1.12, you can upgrade to 1.13. If you are on an older version such as 1.10, you must upgrade to 1.11, then 1.12, then 1.13. In our example, we are upgrading from 1.12 to 1.13.

```hcl
module "eks_cluster" {
  version = "5.0.0"
  source  = "terraform-aws-modules/eks/aws"

  cluster_version = "1.13"

  ...
}
```
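Each hop must be applied separately, so it can help to print the sequence of versions you will go through. This sketch reads the current version with `aws eks describe-cluster` and assumes versions in `major.minor` form. The helper names and the `my-cluster` name in the usage note are hypothetical.

```shell
# Hypothetical helper: prints the current Kubernetes version of an EKS cluster ($1).
eks_cluster_version() {
  aws eks describe-cluster --name "$1" --query 'cluster.version' --output text
}

# Hypothetical helper: prints every minor version between the current version ($1)
# and the target ($2), one per line, since EKS upgrades one minor version at a time.
eks_upgrade_path() {
  local major minor target_major target_minor
  major="${1%%.*}"; minor="${1#*.}"
  target_major="${2%%.*}"; target_minor="${2#*.}"
  if [ "$major" != "$target_major" ]; then
    echo "only minor-version upgrades within one major version are handled" >&2
    return 1
  fi
  while [ "$minor" -lt "$target_minor" ]; do
    minor=$((minor + 1))
    echo "$major.$minor"
  done
}
```

For example, `eks_upgrade_path 1.10 1.13` prints `1.11`, `1.12` and `1.13` on separate lines. `eks_upgrade_path "$(eks_cluster_version my-cluster)" 1.13` computes the same list from the live cluster. Each printed version corresponds to one change to cluster_version followed by one terraform apply.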

When you run terraform plan, you may see some changes that seem unrelated to upgrading the EKS cluster version. The EKS Terraform module is updated often. Upgrading to Terraform 0.12 or to a newer version of the EKS module may cause some resources to be renamed, which shows up as an in-place update or as a destroy and recreate. I highly recommend storing the kubeconfig and aws_auth_config files generated by this module in version control. That way you can always access them if something goes wrong with the cluster update.

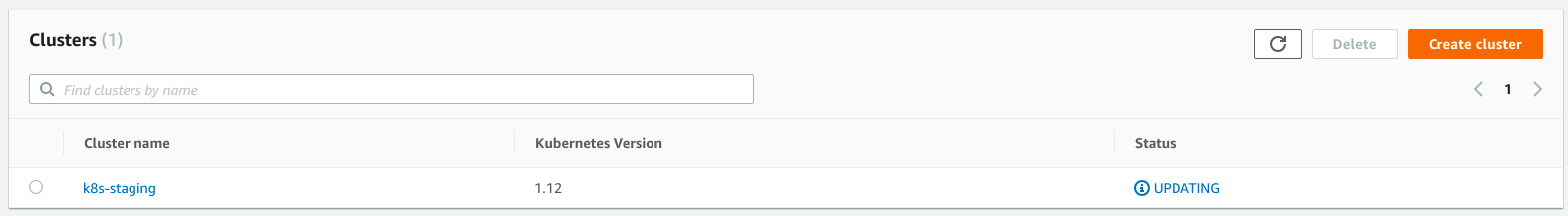
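One way to make unexpected renames stand out is to save the plan to a file and filter it for destructive actions. This sketch assumes Terraform 0.12 or later, whose plan output marks such resources with "will be destroyed" or "must be replaced". The function name and the default plan file name are hypothetical.

```shell
# Hypothetical helper: writes the plan to a file and prints only the resources that
# Terraform reports as destroyed or replaced (Terraform 0.12+ plan wording).
# No output means the plan contains only creates and in-place updates.
plan_destructive_changes() {
  local planfile="${1:-eks-upgrade.tfplan}"
  terraform plan -out="$planfile" >/dev/null || return 1
  terraform show -no-color "$planfile" \
    | grep -E 'will be destroyed|must be replaced' || true
}
```

Because the plan is saved, you can then apply exactly what you reviewed with `terraform apply eks-upgrade.tfplan`.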

Once you are satisfied with the changes, run terraform apply and confirm the changes again. It will take as long as 20 minutes for the EKS cluster’s version to be updated, and you can track its progress in the AWS console or using the output from terraform. This step must be completed before continuing.
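If you would rather script the wait than watch the console, a polling loop like the one below works. It is a sketch, and the function name and defaults are hypothetical. It checks the version as well as the status because the AWS CLI waiter `aws eks wait cluster-active` only waits for an ACTIVE status.

```shell
# Hypothetical helper: polls `aws eks describe-cluster` until the cluster reports
# status ACTIVE at the target version.
# Arguments: cluster name, target version, poll interval in seconds (default 30),
# maximum number of polls (default 60, about 30 minutes in total).
wait_for_eks_version() {
  local name="$1" target="$2" interval="${3:-30}" tries="${4:-60}" out status version
  while [ "$tries" -gt 0 ]; do
    # The JMESPath list query prints "STATUS<TAB>VERSION" with --output text.
    out="$(aws eks describe-cluster --name "$name" \
      --query '[cluster.status,cluster.version]' --output text)"
    status="$(printf '%s\n' "$out" | cut -f1)"
    version="$(printf '%s\n' "$out" | cut -f2)"
    if [ "$status" = "ACTIVE" ] && [ "$version" = "$target" ]; then
      echo "cluster $name is ACTIVE at version $version"
      return 0
    fi
    echo "cluster $name: status=$status version=$version, waiting" >&2
    tries=$((tries - 1))
    sleep "$interval"
  done
  return 1
}
```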

Step 2: Update other system components

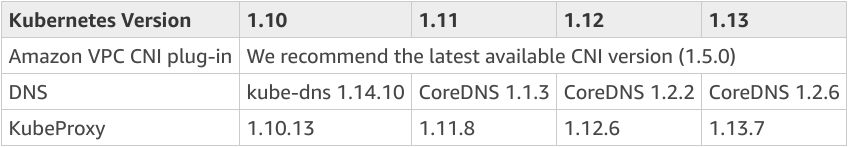

Now that your EKS cluster is on the new version, you need to update a few system components to newer versions. Use the table above to determine which versions to use.

Update Kube-Proxy

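Kube-proxy can be updated by patching its DaemonSet with the newer image version. The image registry is regional, so if your cluster is not in us-west-2, replace the region in the image URL with your own: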

```shell
kubectl patch daemonset kube-proxy \
  -n kube-system \
  -p '{"spec": {"template": {"spec": {"containers": [{"image": "602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v1.13.7","name":"kube-proxy"}]}}}}'
```
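Before and after patching, you can compare the image kube-proxy actually runs with the one you expect. This is a minimal sketch, and the helper names are hypothetical. It assumes the `602401143452` registry account used above, which is correct for many, but not all, AWS regions.

```shell
# Hypothetical helper: builds an EKS add-on image URI ($1 = region, $2 = component,
# $3 = tag). ASSUMPTION: the 602401143452 registry account; some regions use a
# different account, so confirm yours in the EKS documentation.
eks_addon_image() {
  echo "602401143452.dkr.ecr.$1.amazonaws.com/eks/$2:$3"
}

# Prints the image of the first container in a kube-system workload,
# e.g. current_image daemonset kube-proxy
current_image() {
  kubectl get "$1" "$2" -n kube-system \
    -o jsonpath='{.spec.template.spec.containers[0].image}'
}

# Succeeds if kube-proxy already runs the expected image ($1 = region, $2 = tag).
kube_proxy_is_at() {
  [ "$(current_image daemonset kube-proxy)" = "$(eks_addon_image "$1" kube-proxy "$2")" ]
}
```

For example, `kube_proxy_is_at us-west-2 v1.13.7 || echo "kube-proxy still needs patching"`.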

Update CoreDNS

Newer versions of EKS use CoreDNS as the DNS provider. To check if your cluster is using CoreDNS, run the following command:

```shell
kubectl get pod -n kube-system -l k8s-app=kube-dns
```

If your cluster is using CoreDNS, the pod names in the output will start with coredns. If your cluster is not running CoreDNS, follow Amazon's instructions in the EKS documentation to install CoreDNS at the correct version.
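The same check can be scripted so that it returns success or failure instead of requiring a visual scan. This sketch reuses the `k8s-app=kube-dns` label selector from the command above. The function name is hypothetical.

```shell
# Hypothetical helper: succeeds if any pod behind the kube-dns label is a CoreDNS pod.
uses_coredns() {
  kubectl get pods -n kube-system -l k8s-app=kube-dns \
    -o jsonpath='{.items[*].metadata.name}' \
    | tr ' ' '\n' \
    | grep -q '^coredns'
}
```

For example: `if uses_coredns; then echo "update CoreDNS"; else echo "install CoreDNS first"; fi`.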

If your cluster was previously running CoreDNS, update it to the latest version for your version of Kubernetes in the table above:

```shell
kubectl set image --namespace kube-system deployment.apps/coredns \
  coredns=602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v1.2.6
```
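`kubectl set image` returns as soon as the Deployment spec is updated, not when the new CoreDNS pods are running. A small wrapper can follow it with `kubectl rollout status`, which waits for the rollout to finish. The function name and the default timeout are assumptions for illustration.

```shell
# Hypothetical helper: points the CoreDNS Deployment at a new image ($1) and waits
# for the rollout to complete, failing if it takes longer than $2 (default 300s).
update_coredns() {
  local image="$1" timeout="${2:-300s}"
  kubectl set image --namespace kube-system deployment.apps/coredns "coredns=$image" &&
    kubectl rollout status --namespace kube-system deployment.apps/coredns --timeout="$timeout"
}
```

For example: `update_coredns 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v1.2.6`.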

Update Amazon VPC CNI

The Amazon VPC CNI is not tied to specific Kubernetes versions, but it's recommended that you upgrade it to version 1.5.0 when you upgrade your Kubernetes version. To check your current version of the CNI, use:

```shell
kubectl describe daemonset aws-node -n kube-system | grep Image | cut -d "/" -f 2
```

If your version is lower than 1.5.0, run the following command to update the DaemonSet to the newest configuration:

```shell
kubectl apply -f https://raw.githubusercontent.com/aws/amazon-vpc-cni-k8s/master/config/v1.5/aws-k8s-cni.yaml
```
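To turn the version check into a yes/no answer, you can read the aws-node image tag with a JSONPath query and compare it with 1.5.0. This sketch assumes the tag has the form `v1.4.1` and that GNU `sort -V` is available. The function names are hypothetical.

```shell
# Hypothetical helper: prints the aws-node image tag without the leading "v",
# e.g. "1.4.1" for ".../amazon-k8s-cni:v1.4.1".
cni_version() {
  kubectl get daemonset aws-node -n kube-system \
    -o jsonpath='{.spec.template.spec.containers[0].image}' \
    | sed 's/.*://; s/^v//'
}

# Succeeds if the installed CNI is older than the wanted version ($1, default 1.5.0).
cni_needs_upgrade() {
  local have want="${1:-1.5.0}"
  have="$(cni_version)"
  [ "$have" != "$want" ] &&
    [ "$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)" = "$have" ]
}
```

For example, `cni_needs_upgrade && kubectl apply -f https://raw.githubusercontent.com/aws/amazon-vpc-cni-k8s/master/config/v1.5/aws-k8s-cni.yaml` only applies the manifest when the installed CNI is older than 1.5.0.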

Update GPU Support

If your cluster needs GPU support, you should also update the NVIDIA device plugin. This command applies version 1.0.0-beta of the plugin:

```shell
kubectl apply -f https://raw.githubusercontent.com/NVIDIA/k8s-device-plugin/1.0.0-beta/nvidia-device-plugin.yml
```
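After the plugin is updated, GPU nodes should advertise an `nvidia.com/gpu` resource in their allocatable capacity. The sketch below counts those nodes as a quick sanity check. The function name is hypothetical. Nodes without the resource show `<none>` in the custom column and are not counted.

```shell
# Hypothetical helper: counts nodes whose allocatable resources include at least
# one nvidia.com/gpu. Nodes without the resource show "<none>" and are skipped.
count_gpu_nodes() {
  kubectl get nodes --no-headers \
    -o 'custom-columns=NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu' \
    | awk '$2 ~ /^[0-9]+$/ && $2 > 0 { n++ } END { print n + 0 }'
}
```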

Now with all of the system components upgraded to the new version of Kubernetes, it is time to update your worker nodes.

Step 3: Select a new AMI with Terraform

The first step is to use Terraform to find the newest AMI for your new version of Kubernetes and then create an encrypted copy of it for your worker nodes. This can be done with the following Terraform config:

```hcl
# Look up the most recent EKS-optimized worker AMI for Kubernetes 1.13
data "aws_ami" "eks_worker_base_1_13" {
  filter {
    name   = "name"
    values = ["amazon-eks-node-1.13*"]
  }

  most_recent = true

  # Owner ID of AWS EKS team
  owners = ["602401143452"]
}

# The provider's region, which is where the data source above searched
data "aws_region" "current" {}

# Make an encrypted copy of that AMI for the worker nodes
resource "aws_ami_copy" "eks_worker_1_13" {
  name              = "${data.aws_ami.eks_worker_base_1_13.name}-encrypted"
  description       = "Encrypted version of EKS worker AMI"
  source_ami_id     = data.aws_ami.eks_worker_base_1_13.id
  source_ami_region = data.aws_region.current.name
  encrypted         = true

  tags = {
    Name = "${data.aws_ami.eks_worker_base_1_13.name}-encrypted"
  }
}
```

If you are upgrading to a version other than 1.13, simply change the filter value for the aws_ami data source. Run terraform apply and confirm the changes, then wait for the copied AMI to be available.
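If you want to preview which AMI the data source will pick, or wait for the copy from a shell, the AWS CLI can do both. This sketch mirrors the filter and owner ID from the Terraform config above. The function names are hypothetical.

```shell
# Hypothetical helper: prints "AMI_ID<TAB>NAME" of the newest EKS worker AMI for a
# Kubernetes version ($1), using the same owner ID and name filter as the
# aws_ami data source.
latest_eks_ami() {
  aws ec2 describe-images \
    --owners 602401143452 \
    --filters "Name=name,Values=amazon-eks-node-$1*" \
    --query 'sort_by(Images, &CreationDate)[-1].[ImageId,Name]' \
    --output text
}

# Blocks until an AMI (for example the encrypted copy) is available, using the
# AWS CLI's built-in image-available waiter.
wait_for_ami() {
  aws ec2 wait image-available --image-ids "$1"
}
```

For example, `latest_eks_ami 1.13` prints the AMI ID and name, and `wait_for_ami` followed by the copied AMI's ID blocks until the copy is available.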

Step 4: Create a new worker group

To upgrade our worker nodes to the new version, we will create a new worker group of nodes at the new version and then move our pods over to them. Start by adding a new configuration block to the worker_groups list in your Terraform config. Your configuration will differ depending on which features of the eks module you use. It is important to copy your existing worker group so that the new one matches your old configuration. The only field that should change is ami_id, which should reference the newly copied and encrypted AMI. Here is an example configuration that uses variables for most of the options so that they match the other worker groups in my Terraform config:

```hcl
{
  name                 = "workers-1.13"
  instance_type        = var.worker_instance_type
  asg_min_size         = var.worker_count
  asg_desired_capacity = var.worker_count
  asg_max_size         = var.worker_count
  root_volume_size     = 100
  root_volume_type     = "gp2"
  ami_id               = aws_ami_copy.eks_worker_1_13.id
  key_name             = var.worker_key_name
  subnets              = var.private_subnets
},
```

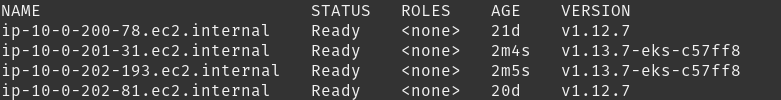

Run terraform apply and check that the plan creates a new IAM instance profile, launch configuration, and AutoScaling group. Then wait for the new AutoScaling group to reach its desired capacity. You can check that the new workers have been created and are Ready by using kubectl get nodes. The output should now list workers running two different versions of Kubernetes.
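Instead of rerunning kubectl get nodes by hand, you can wait until enough new nodes are Ready. This is a sketch, and the function names and polling defaults are hypothetical. It identifies new nodes by their kubelet version, so it assumes the new worker group is the only one running the target version.

```shell
# Hypothetical helper: counts nodes that are Ready and whose kubelet version starts
# with v<target>. (for example, v1.13.7-eks-c57ff8 counts for target 1.13).
count_ready_nodes_at() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.kubeletVersion}{"\t"}{.status.conditions[?(@.type=="Ready")].status}{"\n"}{end}' \
    | awk -F'\t' -v prefix="v$1." 'index($2, prefix) == 1 && $3 == "True" { n++ } END { print n + 0 }'
}

# Waits until at least $2 nodes at version $1 are Ready.
# Optional: poll interval in seconds ($3, default 15) and number of polls ($4, default 80).
wait_for_ready_nodes() {
  local target="$1" want="$2" interval="${3:-15}" tries="${4:-80}"
  while [ "$tries" -gt 0 ]; do
    if [ "$(count_ready_nodes_at "$target")" -ge "$want" ]; then
      return 0
    fi
    tries=$((tries - 1))
    sleep "$interval"
  done
  return 1
}
```

For example, with three workers in the new group, `wait_for_ready_nodes 1.13 3` returns once three 1.13 nodes report Ready.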

If you are using the Kubernetes Cluster Autoscaler, scale it down to avoid conflicts with scaling actions:

```shell
kubectl scale deployments/cluster-autoscaler --replicas=0 -n kube-system
```
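Because you will restore the autoscaler later, it helps to record how many replicas it had before scaling it to zero. This sketch stores the count in a file. The function name and the default file name are hypothetical.

```shell
# Hypothetical helper: saves the cluster-autoscaler replica count to a file
# ($1, default autoscaler-replicas.txt), then scales the Deployment to zero.
pause_cluster_autoscaler() {
  local state_file="${1:-autoscaler-replicas.txt}"
  kubectl get deployment cluster-autoscaler -n kube-system \
    -o jsonpath='{.spec.replicas}' > "$state_file" &&
    kubectl scale deployments/cluster-autoscaler --replicas=0 -n kube-system
}
```

Afterwards, `kubectl scale deployments/cluster-autoscaler --replicas="$(cat autoscaler-replicas.txt)" -n kube-system` restores the original count.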

Step 5: Drain your old nodes

Now that our new nodes are running, we need to move our pods onto them. First, use the node names returned by kubectl get nodes to run kubectl taint nodes on each old node, which prevents new pods from being scheduled on it:

```shell
kubectl taint nodes ip-10-0-200-78.ec2.internal key=value:NoSchedule
kubectl taint nodes ip-10-0-202-81.ec2.internal key=value:NoSchedule
```
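With more than a couple of old nodes, it is easier to select them by kubelet version than to copy names by hand. This sketch applies the same placeholder `key=value:NoSchedule` taint to every node whose kubelet version does not start with the target version. The function names are hypothetical. `kubectl cordon` is an alternative that marks nodes unschedulable without adding a taint.

```shell
# Hypothetical helper: prints the names of nodes whose kubelet version does not
# start with v<target>. (the old workers).
old_nodes() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' \
    | awk -F'\t' -v prefix="v$1." 'NF >= 2 && index($2, prefix) != 1 { print $1 }'
}

# Applies the placeholder key=value:NoSchedule taint to every old node, so pods
# evicted from one old node cannot be scheduled onto another old node.
taint_old_nodes() {
  old_nodes "$1" | while read -r node; do
    kubectl taint nodes "$node" key=value:NoSchedule
  done
}
```

For example, `taint_old_nodes 1.13` taints every node that is not yet running 1.13.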

Now we will drain the old nodes and force the pods to move to new nodes. I recommend doing this one node at a time to ensure that everything goes smoothly, especially in a production cluster:

```shell
kubectl drain ip-10-0-200-78.ec2.internal --ignore-daemonsets --delete-local-data
```
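To drain one node at a time without supervising each step, a loop can drain a node and then wait until no pods are Pending before moving on. This is a sketch. The function name, the 600-second drain timeout and the retry limit are assumptions. It looks at Pending pods cluster-wide, so an unrelated Pending pod will also stop it. Note that kubectl 1.20 and later rename `--delete-local-data` to `--delete-emptydir-data`.

```shell
# Hypothetical helper: drains the given nodes one at a time. After each drain it
# waits (up to 30 polls, 10 seconds apart) until no pods in the cluster are
# Pending, so a scheduling problem surfaces after the first node.
drain_one_by_one() {
  local node tries
  for node in "$@"; do
    echo "draining $node"
    kubectl drain "$node" --ignore-daemonsets --delete-local-data --timeout=600s || return 1
    tries=30
    while [ -n "$(kubectl get pods --all-namespaces --field-selector=status.phase=Pending -o name)" ]; do
      tries=$((tries - 1))
      if [ "$tries" -le 0 ]; then
        echo "pods still Pending after draining $node; stopping" >&2
        return 1
      fi
      sleep 10
    done
  done
}
```

For example: `drain_one_by_one ip-10-0-200-78.ec2.internal ip-10-0-202-81.ec2.internal`.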

Between drain calls, you can check that pods are being scheduled onto the new nodes successfully by using kubectl get pods -o wide. If you know you have a sensitive workload, you can terminate its pods individually so they are rescheduled on the new nodes, instead of using kubectl drain.
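Before you remove an old node, you can list the pods still running on it that are not managed by a DaemonSet. This sketch filters on each pod's first owner reference. The function name is hypothetical. An empty result means only DaemonSet pods remain.

```shell
# Hypothetical helper: lists namespace/name of pods on node $1 whose first owner
# is not a DaemonSet (pods with no owner are listed too).
workload_pods_on_node() {
  kubectl get pods --all-namespaces --field-selector="spec.nodeName=$1" \
    -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name}{"\t"}{.metadata.ownerReferences[0].kind}{"\n"}{end}' \
    | awk -F'\t' 'NF > 0 && $2 != "DaemonSet" { print $1 }'
}
```

For example, `workload_pods_on_node ip-10-0-200-78.ec2.internal` should print nothing once that node is fully drained.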

You may run into issues with StatefulSet pods, which is why it is important that your new worker groups use the same configuration as your old ones. Persistent Volumes backed by EBS can only be attached to nodes in the same Availability Zone as the volume, so the new workers need to run in the same AZs as the old ones.
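To catch this Availability Zone problem before draining, you can compare the zones of your PersistentVolumes with the zones of the new nodes. This is a sketch, and the function name is hypothetical. It assumes volumes and nodes carry the zone label used by Kubernetes 1.13 (`failure-domain.beta.kubernetes.io/zone`). Newer clusters use `topology.kubernetes.io/zone`, which you can pass as the second argument.

```shell
# Hypothetical helper: prints each zone that holds a PersistentVolume but has no
# node running the target Kubernetes version ($1). Empty output means every
# labeled volume has at least one new worker in its Availability Zone.
# ASSUMPTION: PVs and nodes carry the zone label given as $2
# (default: the beta label used by Kubernetes 1.13).
pv_zones_without_new_nodes() {
  local target="$1" label="${2:-failure-domain.beta.kubernetes.io/zone}"
  local key pv_zones node_zones zone
  # kubectl's JSONPath needs the dots inside a label key escaped as "\."
  key="$(printf '%s' "$label" | sed 's/\./\\./g')"
  pv_zones="$(kubectl get pv --no-headers -o "custom-columns=ZONE:.metadata.labels.$key" \
    | grep -v '^<none>$' | sort -u)"
  node_zones="$(kubectl get nodes --no-headers \
    -o "custom-columns=VERSION:.status.nodeInfo.kubeletVersion,ZONE:.metadata.labels.$key" \
    | awk -v prefix="v$target." 'index($1, prefix) == 1 { print $2 }' | sort -u)"
  # Report PV zones that do not appear among the new nodes' zones.
  printf '%s\n' "$pv_zones" | while read -r zone; do
    [ -n "$zone" ] || continue
    printf '%s\n' "$node_zones" | grep -qxF "$zone" || echo "$zone"
  done
}
```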

Step 6: Clean up

Once you have confirmed that all non-DaemonSet pods are running on the new nodes, you can scale down your old worker group. You cannot simply delete the old group's configuration. The eks module takes worker_groups as an ordered list, so removing an entry changes the position of the groups after it. Terraform will then treat those groups as changed and try to recreate their AutoScaling groups. Besides, this will not be the last upgrade, and empty AutoScaling groups are free. So we will keep the old worker group configuration and set its capacity to 0, like so:

```hcl
{
  name                 = "workers-1.12"
  instance_type        = var.worker_instance_type
  asg_min_size         = 0
  asg_desired_capacity = 0
  asg_max_size         = 0
  root_volume_size     = 100
  root_volume_type     = "gp2"
  ami_id               = aws_ami_copy.eks_worker_1_12.id
  key_name             = var.worker_key_name
  subnets              = var.private_subnets
},
```

Now, the next time we upgrade, we can put the new configuration in this worker group and spin up workers without worrying about the Terraform state. Finally, if you scaled down your cluster-autoscaler, scale it back up to its previous replica count so that autoscaling works properly again.
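Once the zero-capacity change is applied, you can use the AWS CLI to confirm that the old AutoScaling group has actually emptied. This is a sketch, and the function name is hypothetical. You need the ASG name that the eks module generated for the old worker group, which you can find in the AWS console or with terraform state list.

```shell
# Hypothetical helper: succeeds once the named AutoScaling group ($1) has a desired
# capacity of 0 and no instances; prints both values either way.
asg_is_empty() {
  local out desired instances
  out="$(aws autoscaling describe-auto-scaling-groups \
    --auto-scaling-group-names "$1" \
    --query 'AutoScalingGroups[0].[DesiredCapacity,length(Instances)]' \
    --output text)" || return 1
  desired="$(printf '%s\n' "$out" | cut -f1)"
  instances="$(printf '%s\n' "$out" | cut -f2)"
  echo "desired=$desired instances=$instances"
  [ "$desired" = "0" ] && [ "$instances" = "0" ]
}
```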

Conclusion

Now you’ve successfully upgraded your Kubernetes cluster on EKS to a new version of Kubernetes, updated the kube-system components to newer versions, and rotated out your worker nodes to also be on the new version of Kubernetes.

We know it can be hard to monitor Kubernetes with traditional tools. If you are looking for a monitoring solution, consider Blue Matador. Blue Matador automatically checks for over 25 Kubernetes events out-of-the-box. We also monitor over 20 AWS services in conjunction with Kubernetes, providing full coverage for your entire production environment with no alert configuration or tuning required.