Since launching in 2014, AWS Lambda has dramatically grown in popularity. Lambda allows you to run code without having to manage the underlying compute resources. From a monitoring standpoint, you no longer have the need or ability to observe traditional performance metrics like CPU and memory.

That doesn't mean you don't need to keep an eye on other things, though. So what metrics should you monitor for your Lambda functions? AWS CloudWatch exposes several metrics related to Lambda performance, but let's narrow it down to what's most important. In this post, we'll list the seven most helpful metrics to monitor to make sure your Lambda functions are running smoothly.

| Blue Matador monitors all seven of these Lambda metrics out of the box—no manual setup required. Learn more about how we monitor serverless architectures > |

AWS Lambda errors

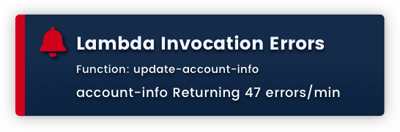

Lambda errors are caused by problems in your function code. This number only counts the uncaught exceptions and runtime errors that your code didn't anticipate, such as an API timeout, bad type-casting, or dividing by zero. This is essentially a catchall for any reason your Lambda invocation didn't successfully execute.

CloudWatch exposes the number of invocations that result in a function error. To address these errors, you’ll need to look at the Lambda logs to diagnose the issue.
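To make the "uncaught" distinction concrete, here is a minimal sketch of two hypothetical Python handlers (the names `handler` and `fragile_handler` and the event shape are assumptions for illustration). The first catches the failure and returns normally, so the invocation would not be counted as an error; the second lets the exception escape, which is the case the error metric counts.

```python
def handler(event, context):
    """Hypothetical Lambda handler that averages a list of numbers."""
    values = event.get("values", [])
    try:
        average = sum(values) / len(values)
    except ZeroDivisionError:
        # Handled here: the invocation returns normally, so it would not
        # be counted as a function error.
        return {"ok": False, "reason": "no values supplied"}
    return {"ok": True, "average": average}


def fragile_handler(event, context):
    """Same logic without the guard. An empty list raises, and the uncaught
    exception is what Lambda reports as a function error."""
    values = event["values"]
    return {"ok": True, "average": sum(values) / len(values)}
```

Note that a handled failure like the one in `handler` is invisible to the error metric, so you may still want to log it.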

AWS Lambda dead-letter errors

When services like S3 or SNS invoke Lambda asynchronously, Lambda retries a failed event (twice by default), and any event that still fails is sent to the configured dead-letter queue. However, if there are errors sending the event payload to the dead-letter queue, CloudWatch registers a dead-letter error.

If you fail to monitor these dead-letter errors, you won't be aware of the associated data loss. Dead-letter errors can occur due to permissions errors, misconfigured resources, or size limits.
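Because any dead-letter error means an event was dropped, a reasonable alert is "more than zero in any period." The sketch below builds the parameters for such an alarm; the helper name, alarm name, and five-minute period are assumptions, while the keys follow the CloudWatch `PutMetricAlarm` API.

```python
def dead_letter_alarm(function_name, period_seconds=300):
    """Build CloudWatch alarm parameters that fire on any dead-letter error.

    The alarm name and default period are illustrative choices.
    """
    return {
        "AlarmName": f"{function_name}-dead-letter-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "DeadLetterErrors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": 0,
        "ComparisonOperator": "GreaterThanThreshold",
        # No datapoints means no dead-letter errors, so stay in OK.
        "TreatMissingData": "notBreaching",
    }
```

If you use boto3, the dictionary can be passed along as `boto3.client("cloudwatch").put_metric_alarm(**dead_letter_alarm("my-function"))`, where `my-function` is a placeholder.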

AWS Lambda function duration

CloudWatch measures the time it takes to run each Lambda function. This is an important metric to monitor because it affects billing, is an indicator of poor app performance, and is an early warning that your functions could be approaching the timeout you've configured (which will terminate your invocation). It's important to look for durations approaching your timeout, because if a function actually times out, CloudWatch will not record the metric.

An increase in function duration could be caused by either:

- recent changes in the function code that have made the function less efficient,

- a slowdown in your function’s dependencies, causing your function to wait on other resources, or

- an inefficient algorithm that performs poorly on the data set it is given.

Again, look through the Lambda logs to diagnose this issue.
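When digging through the logs, the `REPORT` line Lambda writes at the end of each invocation is a handy starting point, since it includes the duration. Here is a rough sketch that pulls out slow invocations from a list of log lines; the function name and threshold are assumptions, and the regular expression assumes the usual `REPORT RequestId: ... Duration: ... ms` layout.

```python
import re

# Matches the first "Duration" field of a Lambda REPORT log line.
REPORT_PATTERN = re.compile(
    r"REPORT RequestId: (?P<request_id>\S+)\s+Duration: (?P<duration_ms>[\d.]+) ms"
)


def slow_invocations(log_lines, threshold_ms):
    """Return (request_id, duration_ms) for invocations at or above the threshold."""
    slow = []
    for line in log_lines:
        match = REPORT_PATTERN.search(line)
        if match is None:
            continue  # Not a REPORT line (for example START, END, or app output).
        duration_ms = float(match["duration_ms"])
        if duration_ms >= threshold_ms:
            slow.append((match["request_id"], duration_ms))
    return slow
```

Once you have the slow request IDs, you can search the same log stream for those IDs to see what each invocation was doing.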

AWS Lambda function timeout

Timeouts can be very detrimental to your app's performance. If the duration of your function hits its configured timeout, the invocation is terminated mid-execution.

Unfortunately, CloudWatch doesn't report timeouts as a separate metric, so you'll need to manually set up an alert on duration with the timeout hardcoded (or monitor this automatically with Blue Matador).
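As a sketch of what "an alert with the timeout hardcoded" might look like, the helper below turns a function's timeout into an alarm on the maximum duration. The helper name, alarm name, and the 90% warning fraction are assumptions; the keys follow the CloudWatch `PutMetricAlarm` API, and the duration metric is reported in milliseconds.

```python
def duration_alarm(function_name, timeout_seconds, fraction=0.9):
    """Build alarm parameters that fire when duration nears the timeout.

    timeout_seconds must be kept in sync with the function's configured
    timeout by hand, which is the tedious part of this approach.
    """
    threshold_ms = timeout_seconds * 1000 * fraction
    return {
        "AlarmName": f"{function_name}-duration-near-timeout",
        "Namespace": "AWS/Lambda",
        "MetricName": "Duration",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": threshold_ms,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",
    }
```

For a function with a 30-second timeout, `duration_alarm("my-function", 30)` alerts once any invocation in a minute runs for roughly 27 seconds or more.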

If your duration is approaching a timeout, you should:

- Configure a higher timeout while you troubleshoot. The maximum function timeout is 900 seconds (15 minutes), but you should pick an amount of time where you are confident your function will not be terminated.

- Find the cause of the slowdown.

- Once you've fixed the slowdown and verified the fix, set the timeout back to its original value. If your function legitimately takes longer to execute, configure an appropriate new timeout.

AWS Lambda function invocations

Any time a function is invoked, whether it completes successfully or ends in a function error, CloudWatch records it in its invocation metric. (This does not include throttled attempts to run the function.) Because invocations are one of the factors that determine billing, it's important to monitor this metric so you can identify any major changes that could lead to a jump in costs.

If you're seeing a higher-than-expected rate of invocations, there are two likely causes. The code that writes to your function's event source (DynamoDB, Kinesis, S3, SQS, etc.) may be malfunctioning and writing more than it should, or your function might be failing with errors that cause retries and boost your invocation count.

Anomalous increases and decreases in invocations are good leading indicators and can help with root cause analysis.
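A static threshold rarely fits invocation counts, so one simple alternative is to compare the latest count against recent history. The sketch below uses a basic z-score; it is only an illustration of the idea (the function name and the threshold of 3 are assumptions), not the algorithm any particular monitoring product uses.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag the latest invocation count if it is far from the recent average.

    history is a list of invocation counts from earlier periods of the
    same length (for example, the previous hours).
    """
    if len(history) < 2:
        return False  # Not enough data to estimate normal variation.
    average = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any change at all is unusual.
        return latest != average
    return abs(latest - average) / spread > z_threshold
```

Because the check uses the absolute difference, it catches sudden drops in invocations as well as spikes.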

AWS Lambda function throttling

AWS limits the number of invocations that can occur at any one time. Each function can reserve a chunk of the account’s concurrency limit, thereby guaranteeing the function exactly that much concurrency. Otherwise, a function will run off the unreserved concurrency limit (if any is available).

When the concurrency limit is hit, Lambda will not invoke a function and will throttle it instead. You’ll want to monitor your functions for throttles so you know when you need to adjust your concurrency.
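The arithmetic behind reserved and unreserved concurrency, and a throttle rate you could alert on, can be sketched as follows. The function names and the example numbers are assumptions; check your own account's concurrency limit rather than relying on the figure used here.

```python
def unreserved_concurrency(account_limit, reserved_by_function):
    """Concurrency left for functions that have no reservation of their own."""
    return account_limit - sum(reserved_by_function.values())


def throttle_rate(throttles, invocations):
    """Fraction of attempted invocations that were throttled.

    Throttled attempts are not included in the invocation count, so the
    total number of attempts is the sum of the two.
    """
    attempts = throttles + invocations
    if attempts == 0:
        return 0.0
    return throttles / attempts


# Example with an assumed account limit of 1,000 and two hypothetical functions.
remaining = unreserved_concurrency(1000, {"checkout": 200, "thumbnails": 50})
```

In this example, every function without a reservation shares the remaining 750 concurrent executions, so a burst in one of them can cause throttling in the others.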

If you are running into Lambda throttling, we dedicated an entire post to how to troubleshoot this and strategies to avoid it going forward.

AWS Lambda iterator age

Lambda functions that have DynamoDB or Kinesis streams as their event source read from those streams with an iterator. If your DynamoDB table changes or records are put to Kinesis too rapidly, your function may not be able to read from the iterator quickly enough. This is reflected in the iterator age, which is the amount of time between when a record is written to the stream and when Lambda reads it.

Your iterator age can fall behind due to a high volume of data in your stream or poor performance of your Lambda function.

If your iterator age gets too far behind, you could lose data. When this occurs, check whether the code triggering changes in your stream is erroneously doing so at an increased rate. If so, fix the code that's causing the issue and wait for Lambda to catch up. Otherwise, increase the concurrency on your function so it can be invoked more often and lower the iterator age.
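Data loss happens when records expire from the stream before Lambda reads them, so it helps to express iterator age as a share of the stream's retention period. This is a minimal sketch: the function names, the 24-hour default retention, and the 50% warning level are assumptions you should adjust to your stream's settings. The iterator age metric is reported in milliseconds.

```python
def retention_used(iterator_age_ms, retention_hours=24):
    """Fraction of the stream's retention period consumed by the iterator age."""
    retention_ms = retention_hours * 60 * 60 * 1000
    return iterator_age_ms / retention_ms


def at_risk_of_data_loss(iterator_age_ms, retention_hours=24, warn_fraction=0.5):
    """True once the function has fallen behind by the warning fraction or more."""
    return retention_used(iterator_age_ms, retention_hours) >= warn_fraction
```

An iterator age of six hours on a stream with 24-hour retention gives a ratio of 0.25, which leaves room to catch up; a ratio close to 1 means records are about to expire unread.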

Conclusion

Monitoring these seven key metrics will help you keep your Lambda functions performing well. While it is possible to set up alerts for these metrics with a traditional monitoring tool, the process can be laborious and the result could be suboptimal. For instance, manually setting up an alert on the duration metric with the timeout hardcoded for each of your Lambda functions would be tedious and error-prone, and an alert with a static threshold for spikes in your invocations could result in noise and false positives.

That's why we recommend giving Blue Matador a try. Out of the box, Blue Matador's pre-configured alerts and statistical algorithms will automatically monitor these key metrics for your Lambda functions, in addition to hundreds of other events in your AWS environment. Start your 14-day trial and see how easy it is to monitor your Lambda functions with Blue Matador.